Text-to-Image models like Stable Diffusion struggle with negation as part of the prompt. We explore here why of it, draw parallels with human thought process and discuss technique that has been developed to deal with it.

- The Elephant in the Room

- The Elephant in Our Minds: Human Thought Processes

- The Elephant’s Digital Cousin: How LVMs See the World

- The Elephant’s Eraser: Negative Prompts to the Rescue

The Elephant in the Room

Picture this: You ask an AI to draw “anything but a green elephant,” because who wants a green elephant when they look perfectly gorgeous as they are. And what do you get? Lo and behold, a green elephant!

Let’s dive into the world of Language Vision Models (LVMs) and their struggle with negative instructions.

To illustrate this phenomenon, let us first see some images generated by the Stable Diffusion 3 model:

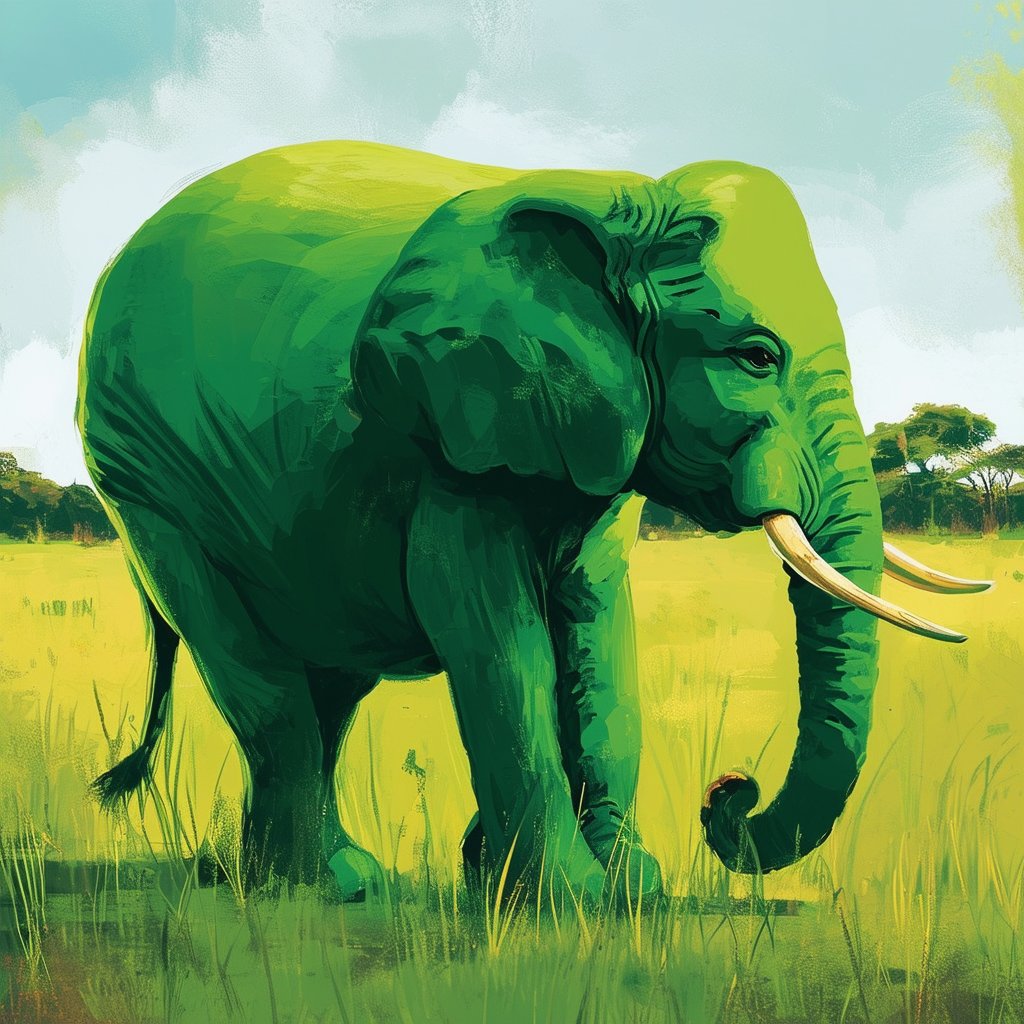

Prompt: "Don't think of a green elephant"

Prompt: "A human without teeth"

Prompt: "A human without hair walking on a non-snowy road"

Okay, third one did get it half-right. But you see the point though.

The Elephant in Our Minds: Human Thought Processes

If we think about it, it’s very similar to how humans behave. When asked not to think of a green elephant, we exactly do that too.

If I have to break the process down into individual steps, of how I personally do it, it would be like this:

- First, the mind scans for the major words in the sentence.

- Then, mind tries to fetch from one-to-many mapping, visuals, that these words might represent. Specifically, nouns are visualized first, then adjectives, and so on.

- And then, those visuals are combined with different degrees of variations or say weights, to form a coherent image.

So when someone says “don’t think of a green elephant,” my mind tries to pick up on nouns and adjectives, fetches the visuals that are mapped to the words from past experiences, and then combines them to create a coherent scenery.

Scenario 1: Sentence with Straight-Forward Cues

Take this sentence:

A bright red balloon floating gently in a clear blue sky.

I wouldn’t presume to know how every mind in the universe works, but if I have to describe it, it would be something like this:

- The mind immediately thinks of “red balloon” almost as if they are not two separate words but one.

- Then the next word mind goes to, is “sky.”

After the word balloon, mind sort of waits for the next cue. And it is not until the word sky, that it changes the already created scenery and adds to it.

Scenario 2: Sentence with Not So Straight-Forward Cues

In contrast, let’s think of the sentence:

Imagine the sound of a silent echo.

At most, what I can think of is an echo-chamber. Almost as if there is a gap in the mental map for words like sound, silent, and echo. A musician or an audio engineer might have a different opinion though. Discussion on this topic is a matter for another post.

Scenario 3: Sentence with Negative Cue

What I feel is more difficult to visualize, even for us as humans who are at the top of the cognitive pyramid, is the sentence that has a negative cue.

Don’t think of a green elephant.

By the time I hit the word “think”, it’s already BZZZZT in my mind. The brake was applied, but the train of thought fails to stop. It has already reached the green elephant station. A glorious green elephant stands in the middle of my mind.

What should I do now? Should I go back and delete it? Of course, I can delete the elephant from the mental scene. But LVMs do not have that luxury.

And maybe that gives a clue why LVMs struggle with negative instructions.

What Goes on in a Human’s Mind When They See a Negative Cue?

What happens in the case of a negative cue is that it is interpreted and appended to the scene after the scene has already been visualized. For example, if I am asked not to think of a red apple, I would visualize a red apple first and then append the negative cue to erase the apple from the scene.

The combination of word negations and their visual counterparts do not exist even for us humans, and there is always a bit of post-processing that happens to erase the visuals of the object from the scene.

The Elephant’s Digital Cousin: How LVMs See the World

While training, LVMs are shown a vast amount of data. And through the course of training, they associate words with their visual counterparts on the basis of their co-occurrence. It creates sort of a loose mapping between words and features of objects they have seen.

What LVMs lack is the mapping of negation of each of those words and their corresponding visual representations. This limitation comes from the fact that during training, LVMs are primarily exposed to positive associations between words and images. They learn to generate images based on what should be present, rather than what should be absent.

The sequential nature of text processing in these models means that by the time a negative instruction is processed, the model may have already activated the visual features associated with the negated concept. Unlike us humans, who can consciously suppress and modify mental images, LVMs lack this flexible, top-down control over their generative process.

For LMs to work with negations, they need to be trained with a lot of negation data. But that’s quite a bit of a challenge. There are so many questions to be answered for that.

- How would the negation data look like?

- Do we go about labeling all the images not containing balloons as not-balloons?

- In the case of the instruction “Don’t create a balloon,” what should the model create? Should it create an empty space? Is that even a valid instruction?

- What is the good way to include negation data in the training?

There is no good way.

The Elephant’s Eraser: Negative Prompts to the Rescue

And so comes the idea of negative prompts. It is an auxiliary optional input prompt that goes with the primary prompt where we can mention something that we don’t want the model to generate.

It is a nice way of moving the model away from a feature space that belongs to the things we don’t want them to generate.

Practically, what it does is it subtracts the embeddings of the negative prompt text from the embeddings of the positive prompt text.

Here’s a simplified example of how it works internally:

1 | positive_prompt_embedding = get_embedding("A human") |

And what happens when these negative prompts are applied to the model? Here are some of the results generated by the Stable Diffusion 3 model with the negative prompts:

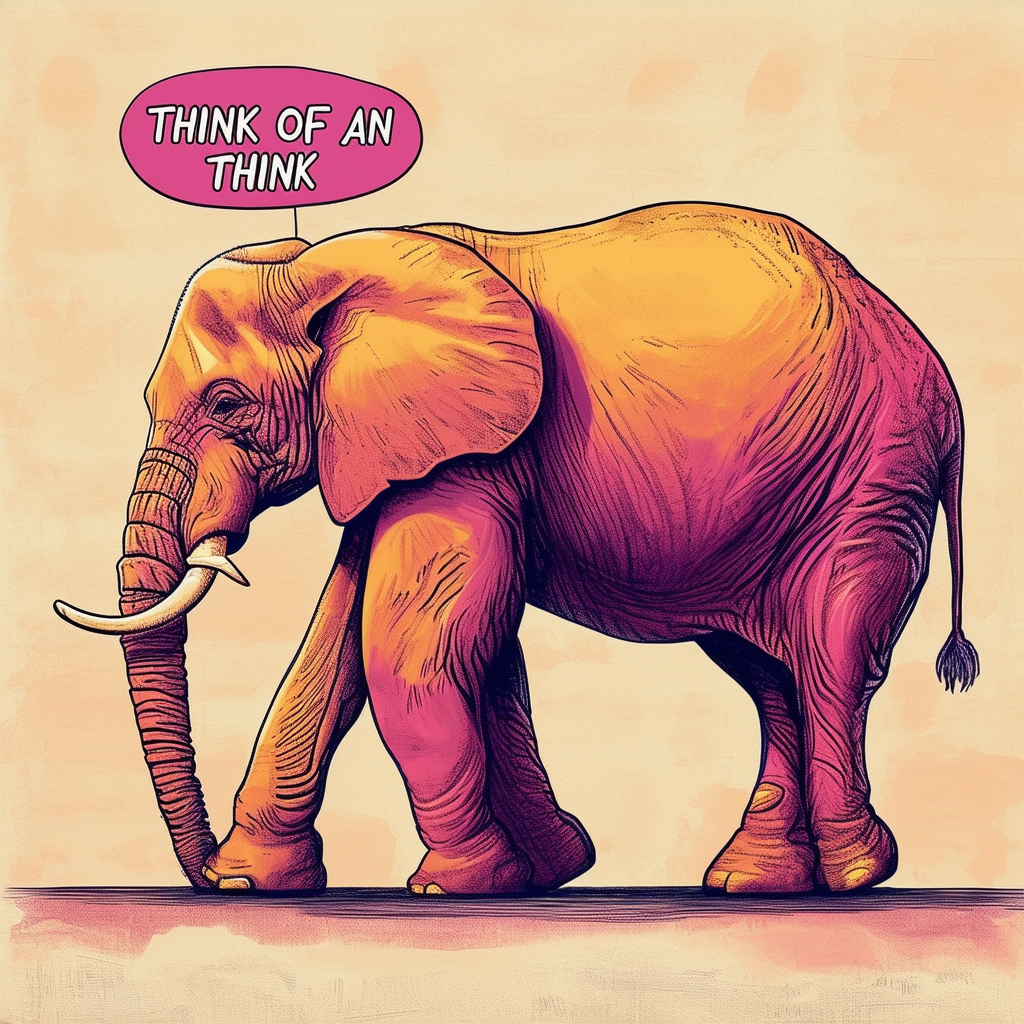

Prompt: "Think of an elephant"

Negative Prompt: "green"

Prompt: "A human"

Negative Prompt: "teeth"

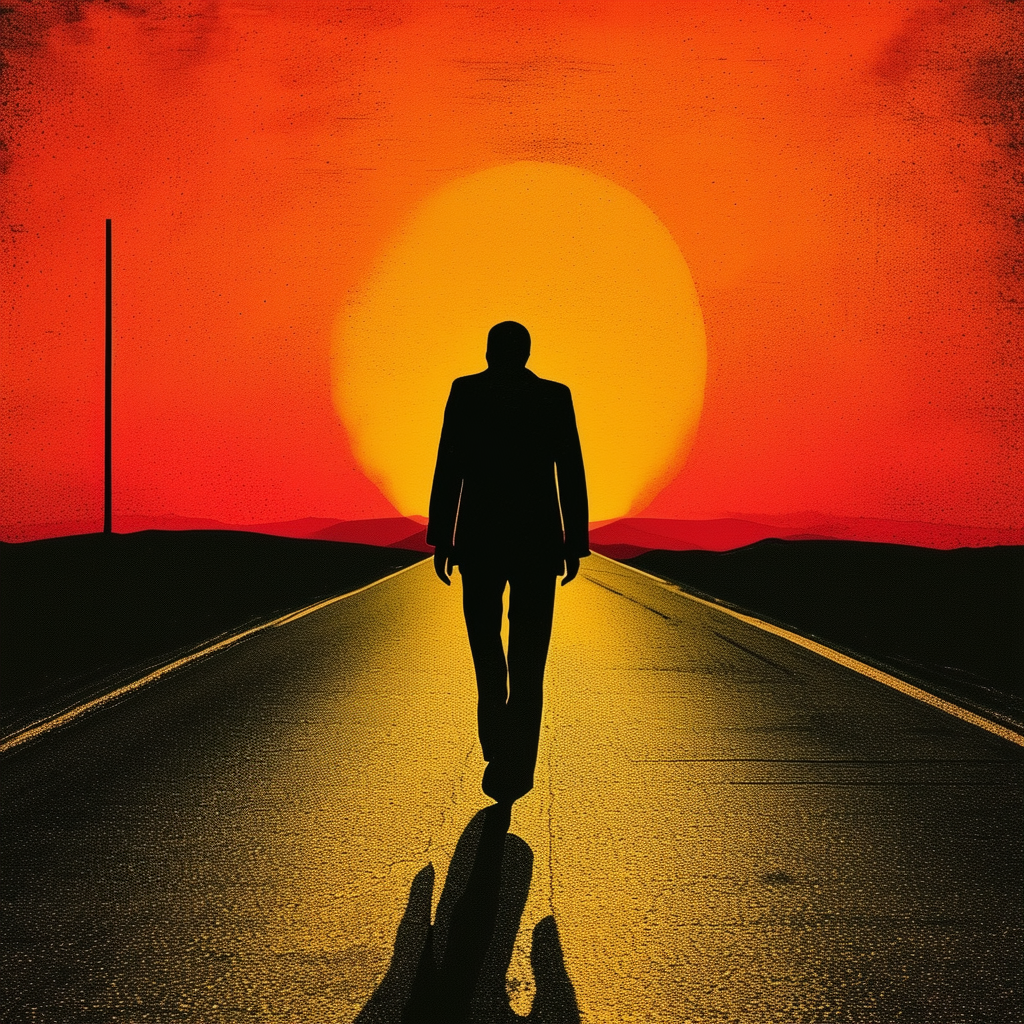

Prompt: "A human walking on a road"

Negative Prompt: "hair, snow"

In above cases, the prompt in itself would have been enough to generate these images, but the negative prompts help the model move further away from the features that we don’t want the model to generate.

If you found this article useful, please cite it as:

Chaturvedi, Pranav. (June 2024). Don’t Think of a Green Elephant. https://pranavchat.com/lvms/2024/06/29/dont-think-of-a-green-elephant/

or

1 | @article{chaturvedi2024elephant, |

Attributions

Stable Diffusion 3. Response to “Don’t think of a green elephant”. AI-generated image. STABILITY AI LTD, 2024. Stable Diffusion

Stable Diffusion 3. Response to “A human without teeth”. AI-generated image. STABILITY AI LTD, 2024. Stable Diffusion

Stable Diffusion 3. Response to “A human without hair walking on a non-snowy road”. AI-generated image. STABILITY AI LTD, 2024. Stable Diffusion

Stable Diffusion 3. Response to “Think of an elephant” with negative prompt “green”. AI-generated image. STABILITY AI LTD, 2024. Stable Diffusion

Stable Diffusion 3. Response to “A human” with negative prompt “teeth”. AI-generated image. STABILITY AI LTD, 2024. Stable Diffusion

Stable Diffusion 3. Response to “A human walking on a road” with negative prompt “hair, snow”. AI-generated image. STABILITY AI LTD, 2024. Stable Diffusion